One way to scale a Node-based system is to run multiple instances of the server. This approach works well for Strapi because Strapi doesn't keep session state in memory on the server side, so no sticky sessions are needed. Requests are authenticated with JWTs, which any instance can validate. So, any time we observe a Strapi setup struggling to handle the request load on the Node side, we add more instances running the same code. For setups with a predictable workload, pm2 offers a simple way to manage multiple Strapi server processes.

However, when we ran Strapi in cluster mode via pm2, we realized we needed to be careful about a few things that we didn't encounter when running a single Strapi instance:

1. Issues encountered

1.1 Strapi schema changes at startup

With Strapi, the schema is stored within our code. As a result, every time Strapi starts, it brings the underlying database schema in sync with the schema defined in code. Additionally, any data-migration scripts (stored in the `database/migrations/` folder of the code repository) that have not already been run are run at Strapi startup.

Because of this design, running Strapi in cluster mode caused more than one Strapi process to trigger these database-side changes. This led to issues we had not observed when running a single Strapi instance.
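To make the failure mode concrete, here is a toy simulation of a "check, then apply" startup step. It is not Strapi's internal code: the `db` object, the `runMigrationOnce` function, the migration name and the 10 ms delay are all hypothetical stand-ins, chosen only to show why several processes starting at the same moment can each decide that the work still needs doing.

```javascript
// Toy model only. Nothing here is a Strapi API.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function runMigrationOnce(db, instanceId) {
  // Step 1: check whether the migration has already been recorded.
  const alreadyRun = db.completed.has('add-slug-column');
  await sleep(10); // simulated round trip to the database
  if (alreadyRun) return;
  // Step 2: apply the migration and record it.
  db.applied.push(instanceId);
  db.completed.add('add-slug-column');
}

// All instances start at the same time, as in an unmanaged cluster start.
async function startTogether(instanceCount) {
  const db = { completed: new Set(), applied: [] };
  const ids = Array.from({ length: instanceCount }, (_, i) => i);
  await Promise.all(ids.map((id) => runMigrationOnce(db, id)));
  return db.applied.length; // how many times the migration was applied
}

// The first instance finishes its startup before the others begin.
async function startPrimaryFirst(instanceCount) {
  const db = { completed: new Set(), applied: [] };
  await runMigrationOnce(db, 0);
  const rest = Array.from({ length: instanceCount - 1 }, (_, i) => i + 1);
  await Promise.all(rest.map((id) => runMigrationOnce(db, id)));
  return db.applied.length;
}
```

With four instances, `startTogether(4)` resolves to `4` (every instance applies the migration), while `startPrimaryFirst(4)` resolves to `1`.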

1.2 Strapi cron jobs

Strapi can be [configured](https://docs.strapi.io/dev-docs/configurations/cron) to run cron jobs. This is a very helpful feature because it allows us to maintain our task-scheduling setup alongside our CMS setup (no separate code repository, infrastructure, or DevOps for CMS-specific scheduled jobs).

However, when we ran Strapi in cluster mode, each of the running Strapi instances triggered the scheduled cron jobs. As a result, on our setup with four Strapi instances, a scheduled task to trigger email alerts ended up sending four emails!

2. Solution Approach

To solve the issues detailed above, we wanted a solution that would give us the following:

A way for a running Strapi instance to identify itself either as a `primary` or a `secondary` server. Doing so would allow us to only have the `primary` instance perform tasks like triggering cron tasks.

A way to initialize only a single Strapi instance first. The rest of the Strapi instances should start only after the first one is up and running. This would ensure that initialization tasks such as running database migration scripts or syncing the model schema aren't performed more than once.
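As a rough sketch of the first requirement, a small helper can decide whether the current process is the `primary` and wrap any task so that it only runs there. The names `isPrimaryInstance` and `primaryOnly` are hypothetical, and the sketch assumes the instance index is available in `NODE_APP_INSTANCE`, the pm2 variable described in the implementation section.

```javascript
// Sketch only: both helpers are hypothetical, not part of Strapi or pm2.
// Assumes pm2 exposes the instance index in NODE_APP_INSTANCE.
function isPrimaryInstance(env = process.env) {
  const raw = env.NODE_APP_INSTANCE;
  // Outside pm2 the variable is unset, so a lone process counts as primary.
  if (typeof raw === 'undefined') return true;
  return parseInt(raw, 10) === 0;
}

// Wraps a task so that it runs only on the primary instance.
// On secondary instances the wrapped task resolves to false without running.
function primaryOnly(task, env = process.env) {
  return async (...args) => {
    if (!isPrimaryInstance(env)) return false;
    return task(...args);
  };
}
```

Passing `env` explicitly is only there to make the helpers easy to test; in a real cron configuration the default `process.env` would be used.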

3. Implementation

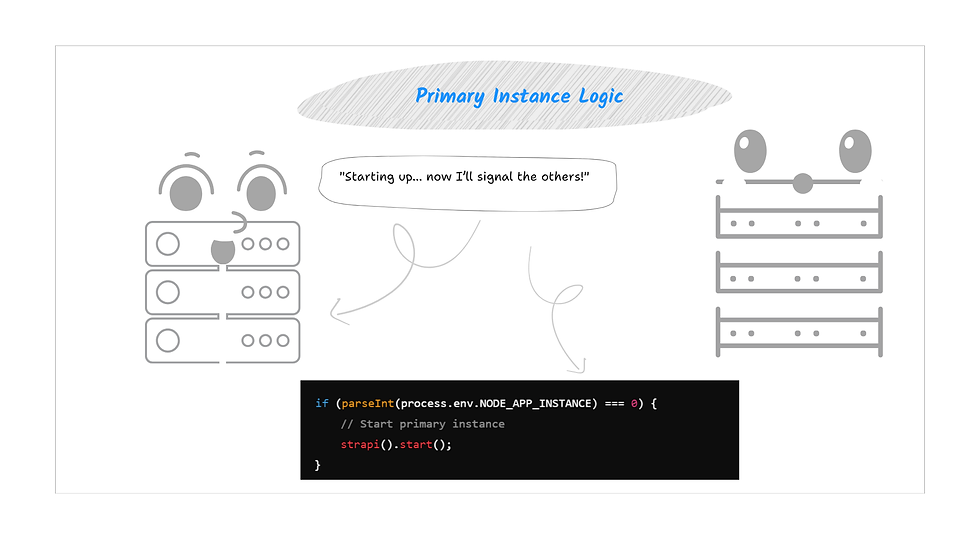

3.1 Segregating the running Strapi instances into primary & secondary

We achieved this with a `pm2` environment variable called `NODE_APP_INSTANCE`. When `pm2` starts Node processes, it assigns a unique, incrementing value to `process.env.NODE_APP_INSTANCE` in each of them. The first instance gets the value `0`, the second gets `1`, and so on. So, with the following check, a running Strapi process can identify whether it is the `primary` or a `secondary`:

```js
const { sendEmailAlerts } = require('./cronSendEmailAlerts');

module.exports = {
  sendEmailAlerts: {
    task: async () => {
      // NODE_APP_INSTANCE is undefined when Strapi is not started via pm2,
      // in which case the single running process does the work.
      const instance = process.env.NODE_APP_INSTANCE;
      if (typeof instance === "undefined" || parseInt(instance, 10) === 0) {
        return await sendEmailAlerts();
      }
      // Secondary instances skip the task
      return false;
    },
    options: {
      rule: "59 11 * * *",
    },
  },
};
```

3.2 Controlling the sequence of starting Strapi instances

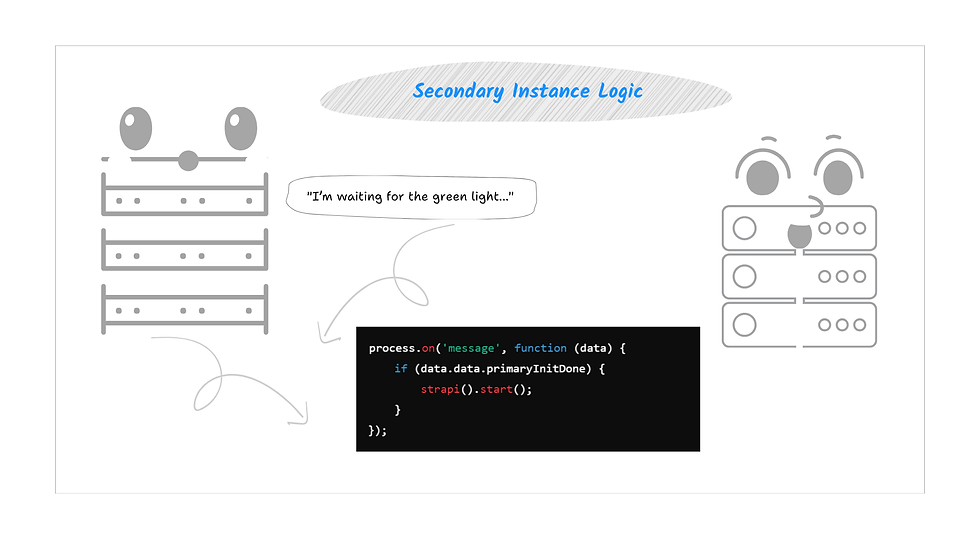

To start only a single Strapi instance first and start the other instances later, we leveraged a `pm2` API called `sendDataToProcessId()`. This API enables inter-process communication between processes managed by pm2.

So, instead of starting Strapi via the regular `strapi start`, we wrote a script where:

The first Strapi instance starts right away, but the other Strapi instances wait for a signal from the first instance.

The first Strapi instance sends a signal to the rest of the Strapi instances once it is up and running.

```js
#!/usr/bin/env node
'use strict';

const strapi = require('@strapi/strapi');
const pm2 = require('pm2');

// Guards against starting Strapi more than once in this process
let performStrapiStart = false;

if (parseInt(process.env.NODE_APP_INSTANCE, 10) === 0) {
  // Logic for starting the primary instance
  if (!performStrapiStart) {
    performStrapiStart = true;
    strapi().start();
  }

  pm2.list((err, list) => {
    if (err) {
      console.log(err);
      return;
    }

    // All pm2 processes that belong to this Strapi app
    const procStrapi = list.filter((p) => p.name === process.env.PM2_APP_NAME);

    // Check every 500 ms whether Strapi has started
    const intervalCheckPrimaryInit = setInterval(() => {
      // global.strapi.isLoaded turns true once Strapi is running
      if (global.strapi && global.strapi.isLoaded) {
        clearInterval(intervalCheckPrimaryInit);

        // Tell the remaining Strapi instance processes to start Strapi
        for (const proc of procStrapi) {
          // Skip the primary itself. Comparing pm_id with NODE_APP_INSTANCE
          // assumes the two match, e.g. when Strapi is the only app under pm2.
          if (parseInt(proc.pm2_env.pm_id, 10) !== parseInt(process.env.NODE_APP_INSTANCE, 10)) {
            pm2.sendDataToProcessId(proc.pm_id, {
              data: { primaryInitDone: true },
              topic: 'process:msg',
              type: 'process:msg',
            }, (err, res) => {
              if (err) console.log(err);
            });
          }
        }
      }
    }, 500);
  });
} else {
  // Logic for starting the secondary Strapi instances
  process.on('message', (data) => {
    if (!performStrapiStart && data && data.data && data.data.primaryInitDone) {
      performStrapiStart = true;
      strapi().start();
    }
  });
}
```

By starting Strapi via the above script using `pm2` in cluster mode, we could now control the order in which the Strapi instances start.

4. Conclusion

Running Strapi in cluster mode via `pm2` allows us to scale our CMS setup. However, having more than one Strapi instance running can cause some issues. Being able to uniquely identify each running Strapi instance, and to communicate between the instances, lets us solve the issues that result from a multiple-instance setup.
